We find ourselves at a pivotal moment in philanthropy: The choices we make about emerging technologies like artificial intelligence (AI) right now will have lasting impacts on the communities we serve.

At the Annenberg Foundation, we’re exploring AI’s potential not just as a tool for efficiency, but as a means to advance our mission in a way that’s aligned with our values. We were led by curiosity and a desire to prepare our own organizations for AI use and its impact, as well as a desire to then determine whether we could share our learnings with our valued colleagues in the sector.

Grounding AI in Values and Operational Excellence

Our exploration of AI over the past months has been rooted in a simple belief: Before we could begin to help or even lead, we needed to listen and learn.

To that end, the Foundation began by working with one of the co-authors of this piece (Chantal), the former executive director of the Technology Association of Grantmakers and co-author of the “Responsible AI Framework for Philanthropy.” Then, together we made three foundational moves to better understand how to start incorporating AI into our work:

- Listening Sessions: Rather than ask our staff, “How do you want to use AI?” we explored questions like, “What are the current pain points in your work?” and “How would you like to be more effective?” This approach allowed us to catalog potential AI use cases that could streamline our work.

- Staff Survey: We conducted an anonymous pulse check survey to gauge staff awareness, concerns, and aspirations. Key findings included the fact that a significant portion of staff (63 percent) are already experimenting with AI tools like Copilot and ChatGPT, primarily in a rudimentary fashion.

- Values Mapping: Using an interactive Mural board, we held an “AI Pop-Up Breakfast” for staff to translate the principles of responsible AI into specific practices for our organization. For example, staff felt strongly that equity in the realm of AI meant that all staff have access to new tools and the training they need to be successful. Staff shared aspirations for AI such as:

- “AI needs are weighted equitably across departments to right-size needs.”

- “Everyone has access to all tools no matter your job.”

- “We have the ability to preserve our unique voice as a foundation.”

- “Make AI lingo and tools comprehendible to the average person.”

- “We are making our grantee partners aware that the Foundation is utilizing AI tools.”

Building an AI Governance Framework

The research above was instrumental in forming our approach to AI governance at the Foundation.

Like several peer foundations, we have chosen to govern AI within the context of data at our organization by creating a Data Usage Policy that also incorporates AI governance. Developing our policy involved classifying our data into sensitivity categories. It also incorporated the values identified by staff during the values mapping exercise described above.

The resulting Data Usage Policy is more than just a set of guidelines; it’s a living document that reflects how we as a foundation hold ourselves accountable for safeguarding the data of our nonprofit partners and our institution when using all forms of technology, including both predictive and generative AI.

If you’re just getting started yourself, we recommend creating an AI (or Data) Usage Policy that identifies, at a minimum, three things:

- Classification of data as public, non-sensitive, and sensitive/confidential

- Approved AI tools for each data type

- Responsibilities of the AI user to check accuracy, anonymize input data, and disclose usage
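
For teams that want to capture such a policy in a form that scripts or intake forms can check, one possible sketch in Python follows. It only illustrates the three elements listed above. The tool names and the tool-to-category mapping are hypothetical placeholders, not the Foundation’s actual policy.

```python
from enum import Enum


class Sensitivity(Enum):
    """The three data classes named in the policy outline."""
    PUBLIC = "public"
    NON_SENSITIVE = "non-sensitive"
    SENSITIVE = "sensitive/confidential"


# Hypothetical tool names for illustration only; a real policy would
# list the tools the organization has actually reviewed and approved.
APPROVED_TOOLS = {
    Sensitivity.PUBLIC: {"public-chatbot", "enterprise-chatbot"},
    Sensitivity.NON_SENSITIVE: {"enterprise-chatbot"},
    Sensitivity.SENSITIVE: set(),  # assumption: no AI tool approved by default
}

# Responsibilities of the AI user, taken from the third element of the outline.
USER_RESPONSIBILITIES = (
    "check accuracy",
    "anonymize input data",
    "disclose usage",
)


def is_tool_approved(tool: str, sensitivity: Sensitivity) -> bool:
    """Return True if the tool is approved for data of the given class."""
    return tool in APPROVED_TOOLS[sensitivity]
```

A staff member or a simple form could then call `is_tool_approved("enterprise-chatbot", Sensitivity.NON_SENSITIVE)` before pasting data into a tool. Keeping the mapping in one place makes it easier to update as tools are reviewed.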

Collaborating with Our Nonprofit Partners

Beyond our organization, we recognized early that our nonprofit partners also seek to streamline and innovate with advances in generative AI. However, rather than immediately offering AI grants or training, we again chose to listen first, a decision that unearthed important differences between foundation staff and grantees.

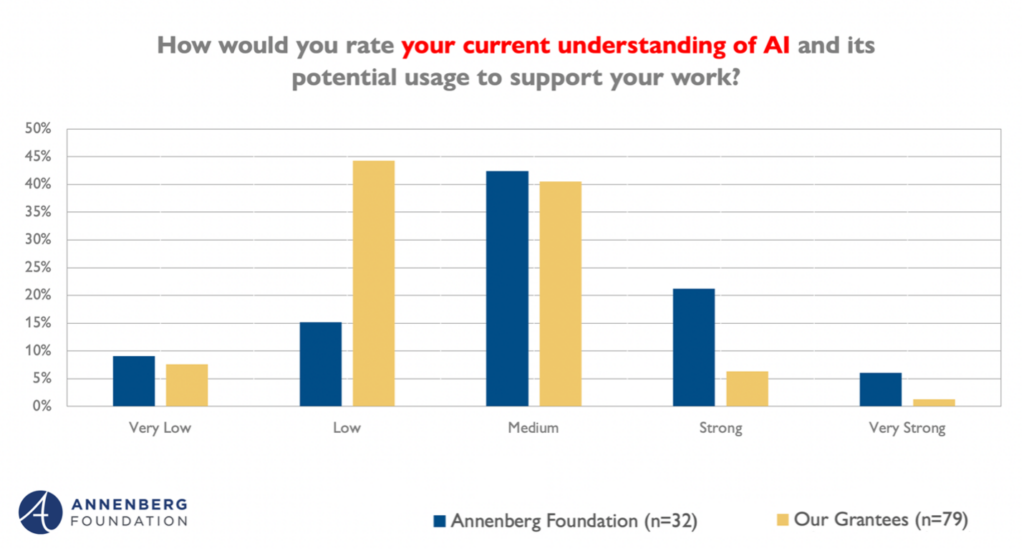

In surveying our grantees, we used several of the same questions asked in the staff survey noted above. For example, we asked:

- How would you rate your current understanding of AI and its potential usage to support your work?

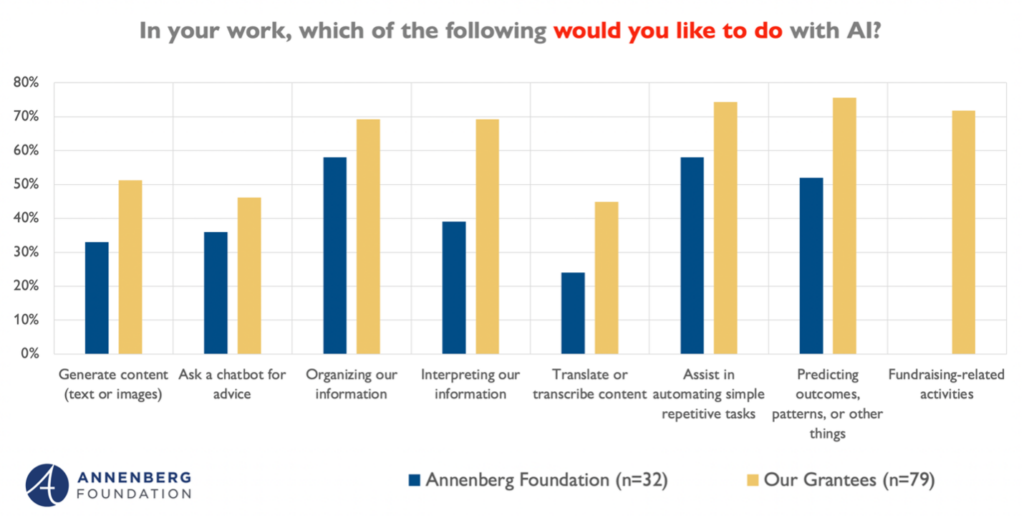

- In your work, which of the following would you like to do with AI?

Interestingly, while foundation staff reported a higher degree of AI understanding than nonprofits, they exhibited less urgency in identifying future applications of AI. Nonprofits, in contrast, see a more immediate and practical need for AI in nearly every area of potential application, especially fundraising.

This difference in perspective has been an important reminder for us of the privilege that philanthropy holds in being less vulnerable to the pressures of efficiency and competition for resources.

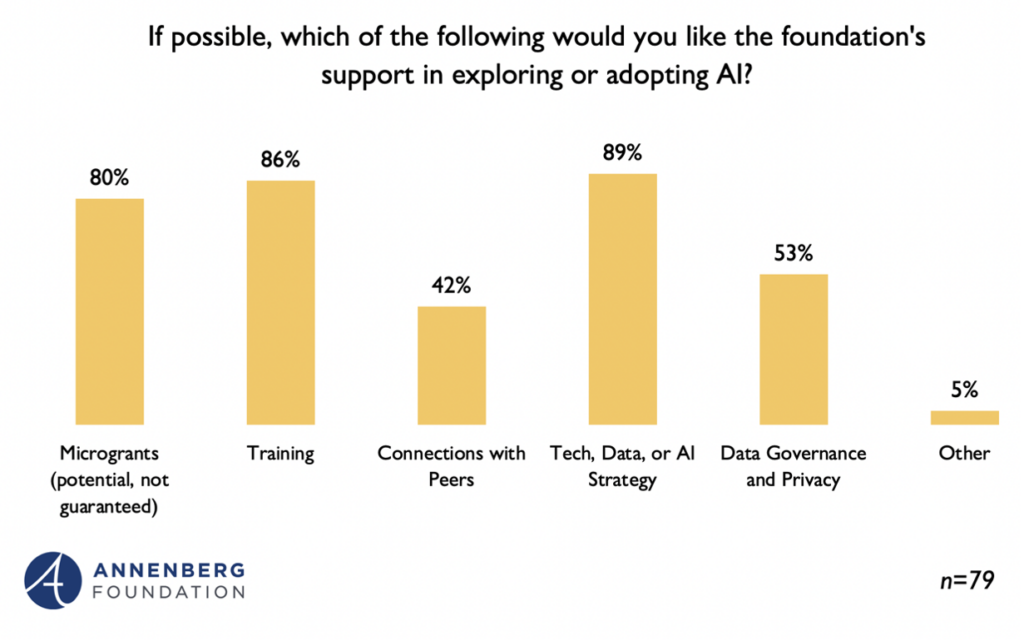

Additional survey questions revealed that, rather than a simple grant for AI experimentation, our nonprofits seem to favor a combination of funding alongside skill-building and support for strategy development.

Given these results, we’ve begun tailoring our AI grantmaking efforts to better align with the practical needs of our grantees, exploring how AI can be used to automate repetitive tasks and enhance information processing within the wider context of technology strategy and skill-building.

Looking Ahead: Sharing Our Lessons and Continuing the Conversation

Looking ahead, we’re excited to launch our AI pilot projects. Currently, we have provided licenses to ChatGPT Teams for all staff, with two additional pilots under review by our Data Governance Committee:

- Image and Document Repository Classification and Search: A significant pain point for staff across multiple departments that could be addressed by off-the-shelf AI solutions.

- HelpDesk GPT and Onboarding GPT: Internal-facing customized chatbots to streamline employee support and onboarding.

As we move forward, we’re committed to sharing the lessons we’ve learned, both the successes and the challenges. We believe that by being open about our journey, we can help foster a culture of collaborative learning in the philanthropic sector.

Navigating AI shouldn’t be a solitary activity. Earlier this summer, we hosted an AI Peer Exchange with the Technology Association of Grantmakers (TAG), where we discussed the responsible implementation of AI alongside the Gates Foundation, Kalamazoo Community Foundation, and the Rockefeller Archive Center. Over the past several months alone, we’ve shared and collaborated with nearly 400 foundations at AI-related events with the Communications Network, the Council of Michigan Foundations, the Council on Foundations, and more.

Our hope is that through exchange and peer learning, we can navigate the complexities of AI together and ensure that emerging technology is used to benefit the communities we serve. We hope you’ll join us.

Cinny Kennard is executive director of the Annenberg Foundation. Find her on LinkedIn. Chantal Forster is AI strategy resident at the Annenberg Foundation. Find her on LinkedIn.

Editor’s Note: CEP publishes a range of perspectives. The views expressed here are those of the authors, not necessarily those of CEP.